I really enjoy interacting with the SQL community on Twitter. Whether it is exchanging silly one-liners, sharing interesting articles and blog posts, or trying to answer a tricky problem, the conversation is always engaging. One such conversation last week involved a community member who was struggling to get the output he wanted out of Invoke-SqlCmd. I was able to help him out, but the conversation got me thinking about how people use this cmdlet within their scripts and processes.

The focal point of the confusion is how Invoke-SqlCmd relates to sqlcmd.exe. Sqlcmd has been around since SQL Server 2005, replacing the antiquated osql executable. Many administrators have gotten used to the robustness of sqlcmd and how they can leverage it for their backend automation. I’ve used sqlcmd for many tasks over the years.

Then along came PowerShell and Invoke-SqlCmd. This cmdlet, included with the SQL Server provider, is intended to cover a lot of the functionality that an administrator can find in sqlcmd, but now as part of PowerShell. What people get hung up on is that Invoke-SqlCmd is not a direct replacement for sqlcmd and that it can’t (and shouldn’t) be used in the same way.

Thinking Differently

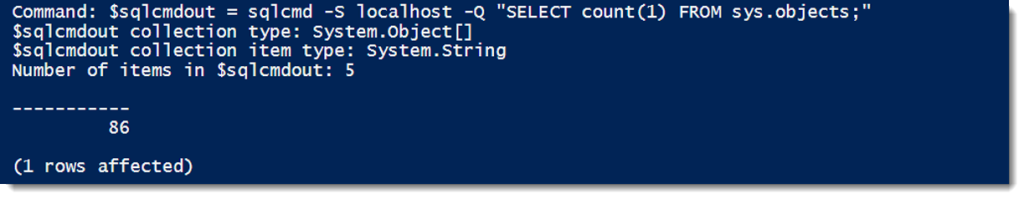

The challenge when thinking about sqlcmd versus Invoke-SqlCmd centers on outputs. One of the cardinal rules of PowerShell is that everything is an object. When comparing sqlcmd with Invoke-SqlCmd, this difference becomes quite stark. First, let’s look at sqlcmd’s output, capturing it into a variable and then examining the types that make up the output:

Using the GetType() method, we can see that the collected output of sqlcmd is an array, with each element of the array being a string. What this means is that each line of output from sqlcmd (including the header and the row count output) is a single string, with all column elements concatenated into that string. As you can imagine, this is clumsy to handle and would require a lot of ugly string parsing to separate out the elements.
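For anyone who wants to repeat that inspection, here is a minimal sketch. It assumes sqlcmd.exe is on the PATH and that a default instance on localhost accepts Windows authentication; the server name and the `SELECT 1` query are my stand-ins, not necessarily what the original session used.

```powershell
# Assumption: default local instance, Windows authentication.
# -S names the server; -Q runs the query and then exits sqlcmd.
$out = sqlcmd -S localhost -Q "SELECT 1"

# Type of the captured output as a whole
$out.GetType().Name

# Number of lines captured, header and row count lines included
$out.Count

# Distinct type(s) of the individual elements
$out | ForEach-Object { $_.GetType().Name } | Select-Object -Unique
```

Each element is just one line of text, which is why pulling a single column value out of it means splitting and trimming strings.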

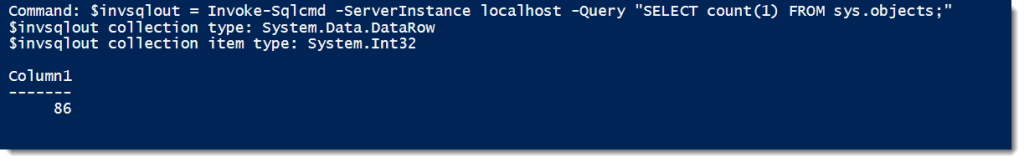

Now let’s look at the same command using Invoke-Sqlcmd:

Right off the bat, the output is noticeably different. Our total output object is now a DataRow, with the individual column being an Int32 type. You’ll note that there is no item count as well. This is because we have a singular object as output (which has no count or length properties). If we further investigate the output using Get-Member, we’ll find that the columns of our query are actual properties of the output object (in this case, Column1 since we didn’t name our column).
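If you don’t have an instance handy, the same behavior can be sketched with a hand-built DataTable standing in for the query result. The table below is an assumption made purely for illustration; with a live connection, `$row` would simply be whatever Invoke-Sqlcmd returns.

```powershell
# Stand-in for: $row = Invoke-Sqlcmd -Query 'SELECT 1'
# Build a one-column, one-row table by hand so no SQL Server is needed.
$table = New-Object System.Data.DataTable
[void]$table.Columns.Add('Column1', [int])
[void]$table.Rows.Add(1)
$row = $table.Rows[0]

$row.GetType().Name            # DataRow
$row.Column1.GetType().Name    # Int32

# PowerShell surfaces each column as a property of the row
$row | Get-Member -MemberType Property
```

The point to take away is that `$row.Column1` is a typed value you can use directly, with no parsing involved.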

A New Perspective

This helps us because we can now start treating our outputs as data, not just a mass of text that has to be parsed. For example, let’s consider the following query:

SELECT

SERVERPROPERTY('InstanceName') as InstanceName

,SERVERPROPERTY('MachineName') as MachineName

,SERVERPROPERTY('ProductVersion') as SQLVersion

,SERVERPROPERTY('Edition') as SQLEdition

,SERVERPROPERTY('ProductLevel') as SQLReleaseLevel

If you want to make use of these values in PowerShell and use sqlcmd, you would have to perform all sorts of string manipulations to separate out the values. To make it easier, we’d likely make a separate call to sqlcmd.exe for each value. Using Invoke-SqlCmd changes the game, allowing you to easily access each column as a property of your output:

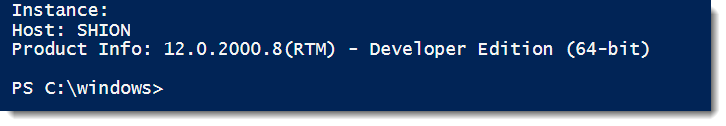

$sql=@"

SELECT

SERVERPROPERTY('InstanceName') as InstanceName

,SERVERPROPERTY('MachineName') as MachineName

,SERVERPROPERTY('ProductVersion') as SQLVersion

,SERVERPROPERTY('Edition') as SQLEdition

,SERVERPROPERTY('ProductLevel') as SQLReleaseLevel

"@

$sqlstats = Invoke-Sqlcmd -Query $sql

cls

'Instance: ' + $sqlstats.InstanceName

'Host: ' + $sqlstats.MachineName

'Product Info: ' + $sqlstats.SQLVersion +'('+$sqlstats.SQLReleaseLevel+') - ' + $sqlstats.SQLEdition

This ability to reference columns as properties gets even more powerful when we deal with larger data sets for our scripts. A common technique I use is to leverage a collection of databases to drive work I need to do. With my collection as a series of objects, each row element is easier to access and make use of for my processing. To see a detailed example of this, run the following commands and study the output of the Get-Member call:

$dbs = Invoke-Sqlcmd -Query 'select name,recovery_model_desc,page_verify_option_desc from sys.databases;'

$dbs | Get-Member
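To show how a collection like this can drive work, here is a small sketch. The function name and the check it performs (flagging databases whose page verify option is not CHECKSUM) are hypothetical examples of mine; the only thing it relies on is that each row exposes its columns as properties.

```powershell
# Hypothetical helper: returns the names of databases whose page verify
# option is anything other than CHECKSUM.
function Get-WeakPageVerifyDatabase {
    param(
        # Rows with name and page_verify_option_desc properties, such as
        # the rows returned by the sys.databases query.
        [Parameter(Mandatory)]
        [object[]]$Database
    )

    foreach ($db in $Database) {
        # Each column is addressed by name; no string parsing required
        if ($db.page_verify_option_desc -ne 'CHECKSUM') {
            $db.name
        }
    }
}
```

With the rows from the sys.databases query in `$dbs`, calling `Get-WeakPageVerifyDatabase -Database $dbs` would hand back just the names that need attention, ready to feed into the next step of a script.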

Data Driven Decisions

Invoke-SqlCmd lets you integrate your data with your scripts. When you make your outputs discrete objects, data becomes readily accessible for your automation. It allows you to move those clunky parts of your processing out of T-SQL and into PowerShell, making your scripts more robust. You can use T-SQL for what T-SQL is good at, allowing PowerShell to take over where appropriate.

This does not replace sqlcmd. Another key difference is that Invoke-SqlCmd isn’t interactive, whereas sqlcmd is. If you need a command line tool for working with SQL Server (you’re on Server Core or you need an emergency connection), sqlcmd will be your weapon of choice.

The fuzzy area is around compiled SQL scripts you might use. Both Invoke-SqlCmd and sqlcmd.exe can accept these scripts and allow you to perform sqlcmd’s variable replacement for parameterizing scripts. Choosing which method you use, in my mind, comes back to outputs. If your script output is simply a matter of logging the entire execution of your script for later review, sqlcmd is a perfectly acceptable method. However, if you need to integrate your scripts into a larger process and make use of that output within the script execution, Invoke-SqlCmd could be your better option.
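As a rough side-by-side, the sketch below runs the same parameterized script both ways. The file names, the `DatabaseName` variable, the query inside the script, and the localhost default instance are all assumptions for illustration; swap in your own.

```powershell
# Hypothetical script file; $(DatabaseName) is a sqlcmd scripting variable.
# Single quotes keep PowerShell from expanding $(...) itself.
$tempDir    = [System.IO.Path]::GetTempPath()
$scriptFile = Join-Path $tempDir 'db_files.sql'
$logFile    = Join-Path $tempDir 'db_files.log'
Set-Content -Path $scriptFile -Value 'SELECT name, physical_name FROM sys.master_files WHERE database_id = DB_ID(''$(DatabaseName)'');'

# Logging route: sqlcmd.exe writes the whole run to a text file for later review.
# -i is the input script, -v sets the variable, -o is the output file.
sqlcmd -S localhost -i $scriptFile -v DatabaseName=master -o $logFile

# Object route: Invoke-Sqlcmd returns rows whose columns are properties,
# so the results can be used immediately in the rest of the script.
$files = Invoke-Sqlcmd -ServerInstance localhost -InputFile $scriptFile -Variable 'DatabaseName=master'
$files | ForEach-Object { '{0} -> {1}' -f $_.name, $_.physical_name }
```

The script file is identical in both cases; what differs is whether you end up with a log to read or objects to act on.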

PowerShell is about options. Everyone has their own methods and patterns. The idea behind PowerShell scripting is not to give you a one-size-fits-all solution, but to provide you the components for building your scripts. The challenge we all have is to make sure we’re using the right components in the right way, avoiding the proverbial “driving a screw with a hammer” problem. When presented with a new tool set, don’t try to use it the same way as other tools you’ve had in the past. Instead, learn the tool on its own so you can make the best use of it for your process.
