Last week, as part of T-SQL Tuesday 68, I introduced you to two PowerShell functions for validating and setting your SQL Server configurations. While useful, they depend on some sort of configuration source, which raises the question: How do we get this source? This post covers a third function that helps you create your configuration source from an existing server’s values, which you can then use directly or modify as you see fit.

Get-SQLConfiguration

The concept of Get-SQLConfiguration is simple: Get the sys.configurations values of a SQL Server instance and export them as a collection of name/value pairs that can be used by the other functions. The additional criterion to consider is that Test-SQLConfiguration and Set-SQLConfiguration both identify configurations by their SMO property names, so our configuration source must also use these names. The result is a function that uses the SMO to perform its export:

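    function Get-SQLConfiguration {
        param(
            [string]$InstanceName = 'localhost',
            [string[]]$Filter
        )

        # Connect to the instance through SMO (the SMO assembly must already be loaded).
        $smosrv = New-Object ('Microsoft.SqlServer.Management.Smo.Server') $InstanceName

        # Choose the configuration properties to export: either the names in -Filter,
        # or everything except the 'Properties' collection itself.
        if ($Filter) {
            $configs = $smosrv.Configuration | Get-Member -MemberType Properties | Where-Object { $Filter -contains $_.Name }
        }
        else {
            $configs = $smosrv.Configuration | Get-Member -MemberType Properties | Where-Object { $_.Name -ne 'Properties' }
        }

        # Emit one Name/DesiredValue pair per property, using the value currently in effect.
        $output = @()
        foreach ($config in $configs) {
            $output += New-Object PSObject -Property ([Ordered]@{
                'Name'         = $config.Name
                'DesiredValue' = $smosrv.Configuration.$($config.Name).RunValue
            })
        }

        return $output
    }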

The function itself is not complex, but I did want to add one other feature to it. A simple export might be overwhelming, considering the number of configuration properties within SQL Server. Most of these will actually be left at their defaults. To aid us, I also added a Filter parameter that accepts an array of SMO configuration property names and will only export those properties.

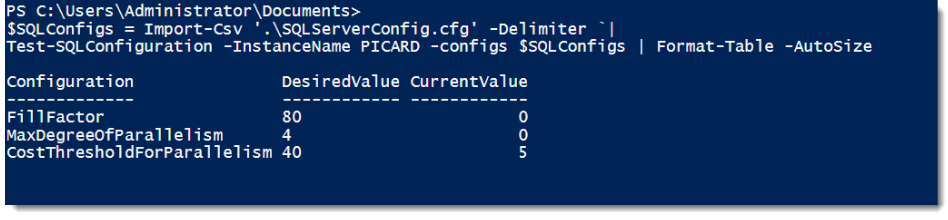

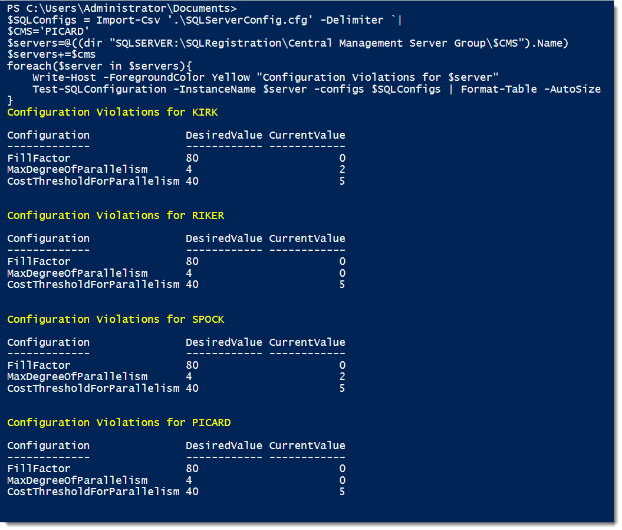
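To see how the Filter parameter narrows the export without needing a live instance, here is a minimal sketch that runs the same kind of selection against a stand-in object. The `$fakeConfiguration` object and its values are made up for illustration; a real `$smosrv.Configuration` object exposes many more properties.

```powershell
# Stand-in for $smosrv.Configuration. Property names mirror SMO; values are invented.
$fakeConfiguration = [PSCustomObject]@{
    FillFactor             = [PSCustomObject]@{ RunValue = 0 }
    MaxDegreeOfParallelism = [PSCustomObject]@{ RunValue = 4 }
    MaxServerMemory        = [PSCustomObject]@{ RunValue = 8192 }
}

# Only the properties named here should survive the filter.
$Filter = @('FillFactor', 'MaxServerMemory')

# Same selection pattern as the function: list the properties, keep the named ones.
$configs = $fakeConfiguration | Get-Member -MemberType Properties |
    Where-Object { $Filter -contains $_.Name }

# Build one Name/DesiredValue pair per selected property.
$filtered = foreach ($config in $configs) {
    [PSCustomObject]@{
        Name         = $config.Name
        DesiredValue = $fakeConfiguration.$($config.Name).RunValue
    }
}

$filtered | Format-Table -AutoSize
```

Because `-contains` is case-insensitive, a filter entry such as 'fillfactor' would still match in this sketch.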

There are a couple of patterns for using this function. The simplest is to just run it for one server and use the output to check another:

$configurations=Get-SQLConfiguration -InstanceName PICARD

Test-SQLConfiguration -InstanceName RIKER -Configs $configurations

Together, these calls compare the two instances and report the differences between them. If we wanted to get more specific, we could use our Filter parameter to only compare one or two configurations:

$configurations=Get-SQLConfiguration -InstanceName PICARD -Filter @('FillFactor')

Test-SQLConfiguration -InstanceName RIKER -Configs $configurations

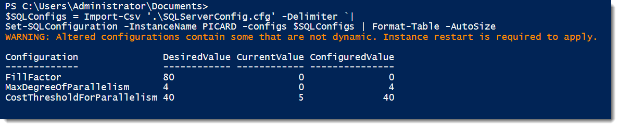
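To make the idea of the comparison concrete, the following is a rough sketch of checking one exported set against another. It is not the actual Test-SQLConfiguration implementation. The two sample sets and the `ActualValue` column name are assumptions for illustration, and the data is hard-coded so the snippet runs without a SQL Server instance.

```powershell
# Hypothetical exports from two instances; in practice these would come from Get-SQLConfiguration.
$picard = @(
    [PSCustomObject]@{ Name = 'FillFactor'; DesiredValue = 80 }
    [PSCustomObject]@{ Name = 'MaxDegreeOfParallelism'; DesiredValue = 4 }
)
$riker = @(
    [PSCustomObject]@{ Name = 'FillFactor'; DesiredValue = 0 }
    [PSCustomObject]@{ Name = 'MaxDegreeOfParallelism'; DesiredValue = 4 }
)

# Index the second set by name so each lookup is a simple hash table access.
$rikerByName = @{}
foreach ($item in $riker) { $rikerByName[$item.Name] = $item.DesiredValue }

# Report only the configurations whose values differ.
$differences = foreach ($item in $picard) {
    $actual = $rikerByName[$item.Name]
    if ($actual -ne $item.DesiredValue) {
        [PSCustomObject]@{
            Name         = $item.Name
            DesiredValue = $item.DesiredValue
            ActualValue  = $actual
        }
    }
}

$differences | Format-Table -AutoSize
```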

It is also possible to store and manage these configurations by using the Get-SQLConfiguration function. Because the output is a simple collection of name/value objects, we have a variety of storage options. The easiest way to get started is to use the built-in Export-Csv cmdlet and save our configurations as a delimited file (I like pipes, but commas will do):

Get-SQLConfiguration -InstanceName PICARD | Export-Csv -Path .\PicardConfig.cfg -NoTypeInformation

With the text file that is generated, we can go in and edit our configs, removing what we want and editing values we need to update. The result can then be stored in source control for tracking and audit purposes. We could even go as far as to load the configurations into a database table and call them from a central administrative server. There are a lot of possibilities.
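As a rough sketch of the full round trip, the snippet below saves a set of configurations with a pipe delimiter and loads it back. The sample data and the temporary file location are assumptions for illustration. One detail worth noting is that Import-Csv returns every field as a string, so the sketch casts the values back to integers before they are handed to anything that compares them numerically.

```powershell
# Hypothetical export; in practice this would be the output of Get-SQLConfiguration.
$configurations = @(
    [PSCustomObject]@{ Name = 'FillFactor'; DesiredValue = 80 }
    [PSCustomObject]@{ Name = 'MaxDegreeOfParallelism'; DesiredValue = 4 }
)

# Write to a temporary location so the example cleans up after itself.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'PicardConfig.cfg'

# Save with a pipe delimiter instead of the default comma.
$configurations | Export-Csv -Path $path -Delimiter '|' -NoTypeInformation

# Import-Csv returns strings, so rebuild each row with an integer value.
$reloaded = Import-Csv -Path $path -Delimiter '|' | ForEach-Object {
    [PSCustomObject]@{
        Name         = $_.Name
        DesiredValue = [int]$_.DesiredValue
    }
}

Remove-Item -Path $path
```

The reloaded collection has the same Name and DesiredValue shape as the original export, so it could be passed along in the same way.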

The Code

While I’ve published the functions in these two blog posts, there’s an easier way to get ahold of them. These are part of my SQLConfiguration module published on GitHub, which you can download and use (along with my other SQL configuration functions). Please keep in mind this module and the code contained within should still be considered a work in progress and the functions are provided as is. The usual disclaimers apply, so be careful using it and test it thoroughly before making regular use of it. Of course, if you do find any issues, I would love to know about them so I can review and update my code.

There are many additional items that can be added to this. The largest that comes to mind is dynamic configuration values, as these functions consider your configs to be static. Items like minimum memory, maximum memory, and maximum degree of parallelism depend on the hardware configuration, and there are various ways to calculate these values. There are also configurations not stored in this part of SQL Server, such as default database directories and SQL Server Agent settings. These functions have plenty of room for enhancement. They’re a good start, however, toward giving you additional tools to manage your environment and making your SQL Server deployments more consistent and reliable.
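As one possible direction for dynamic values, here is a minimal sketch of a helper that derives two settings from hardware figures and emits them in the same Name/DesiredValue shape. The function name `Get-DynamicSQLConfiguration`, its parameters, and both sizing rules are hypothetical and chosen only for illustration. They are not part of the module and are not a sizing recommendation.

```powershell
# Hypothetical helper: the name, parameters, and sizing rules are assumptions for illustration.
function Get-DynamicSQLConfiguration {
    param(
        [Parameter(Mandatory = $true)][int]$TotalMemoryMB,
        [Parameter(Mandatory = $true)][int]$LogicalProcessors,
        [int]$ReservedMemoryMB = 4096
    )

    # Assumed rule: leave a fixed reserve for the operating system, but never go below 1 GB.
    $maxMemory = [Math]::Max($TotalMemoryMB - $ReservedMemoryMB, 1024)

    # Assumed rule: cap parallelism at 8 or the processor count, whichever is lower.
    $maxDop = [Math]::Min($LogicalProcessors, 8)

    # Emit the same shape that Get-SQLConfiguration produces.
    [PSCustomObject]@{ Name = 'MaxServerMemory'; DesiredValue = $maxMemory }
    [PSCustomObject]@{ Name = 'MaxDegreeOfParallelism'; DesiredValue = $maxDop }
}

# Example: a server with 32 GB of memory and 16 logical processors.
$dynamic = Get-DynamicSQLConfiguration -TotalMemoryMB 32768 -LogicalProcessors 16
$dynamic | Format-Table -AutoSize
```

In practice, the hardware figures would be read from the target server rather than passed in by hand, and the calculated pairs could be merged with a static configuration set before testing or applying it.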
