I’ve been super busy lately, but I wanted to at least post something for this month’s T-SQL Tuesday. The topic is about encouraging new speakers, something I’m very passionate about. I think that speaking is one of the best things you can do to boost your career. If you are reading this and are considering speaking, I encourage you to reach out to your local user group and see if you can get started with a 15- or 30-minute session. Take a shot. I’ll bet you’ll be surprised.

What I want to share are some tips for the day you give your first presentation. A lot of folks are going to talk to you about building and preparing your presentation, but that is only half the battle. What you should do when you actually GIVE the presentation is often glossed over, even though this is the highest-pressure moment of the whole cycle.

How do we reduce or relieve some of this pressure? Well, let’s start with a list of things that could possibly go wrong when you present. Think about the following list:

- You’re presenting and you get an on-call page.

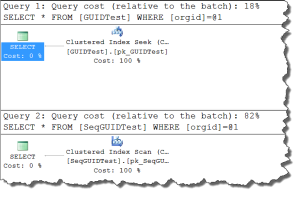

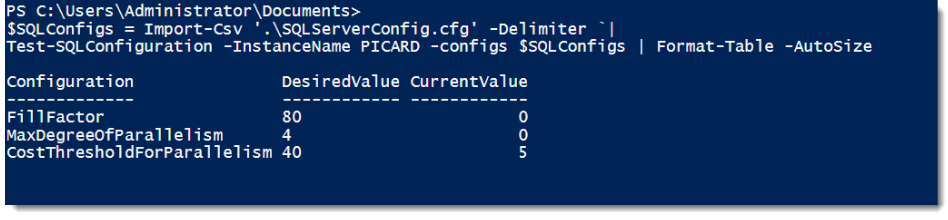

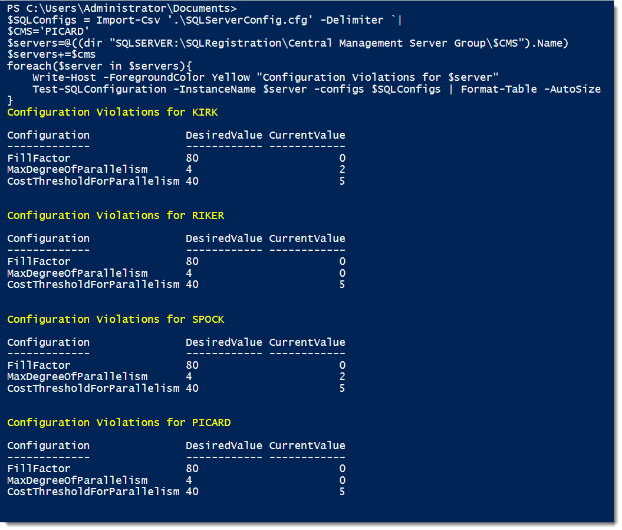

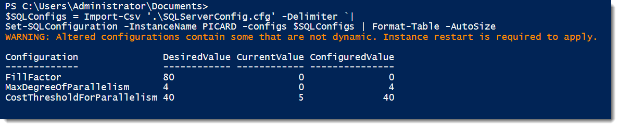

- Your demo blows up spectacularly.

- While giving your presentation, your computer attempts to apply updates.

- You start 10 minutes late because you have issues with your video or sound.

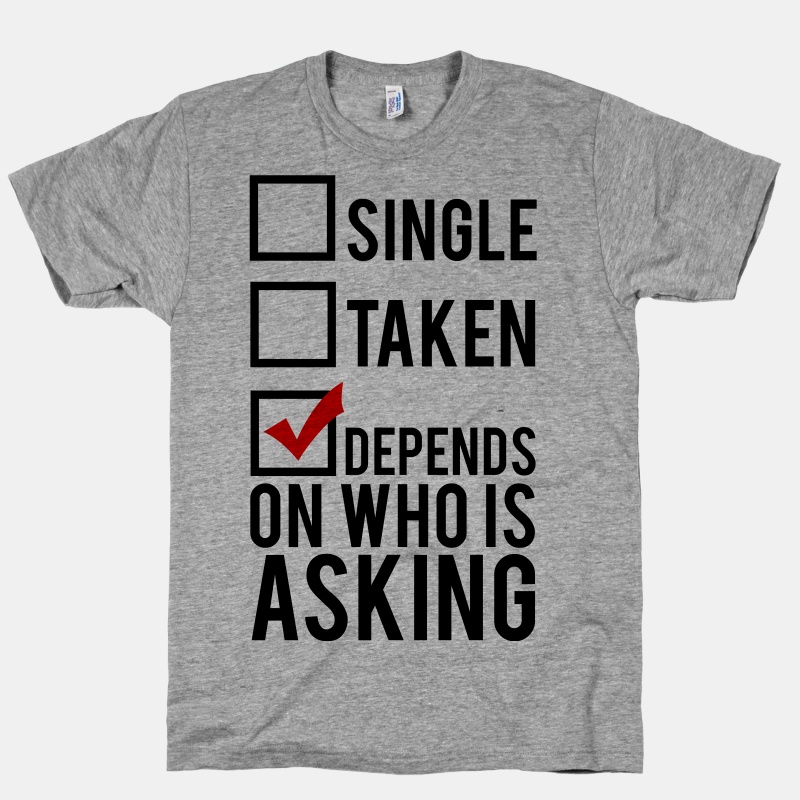

- During your presentation, someone sends you a picture on your favorite IM client:

Any of these will easily throw an experienced presenter off their game. For a new speaker, it can spell disaster. I’ve got a routine that I go through on the day of my presentation, which is designed to reduce that risk of disaster. And who doesn’t like reduced risk?

Getting Ready

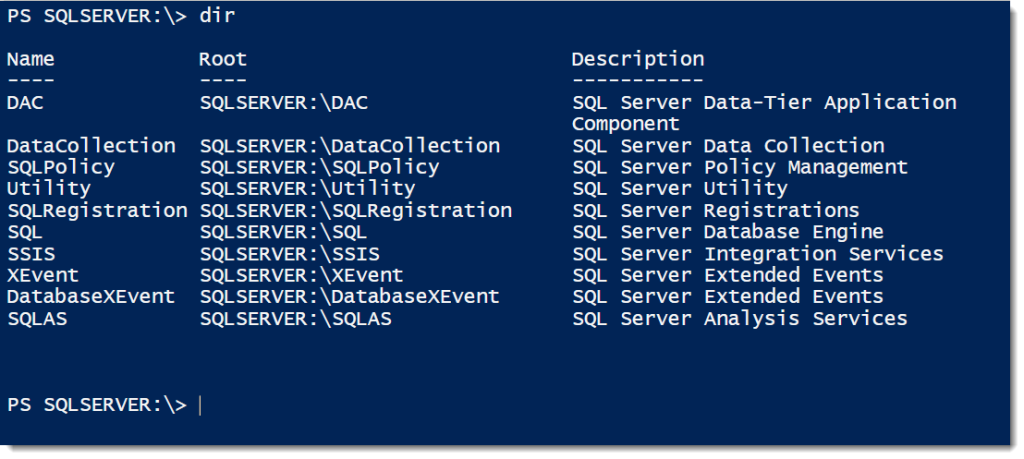

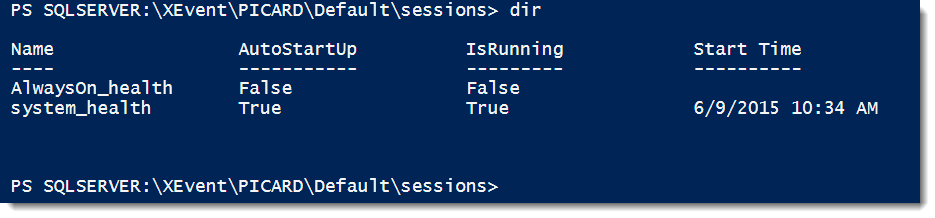

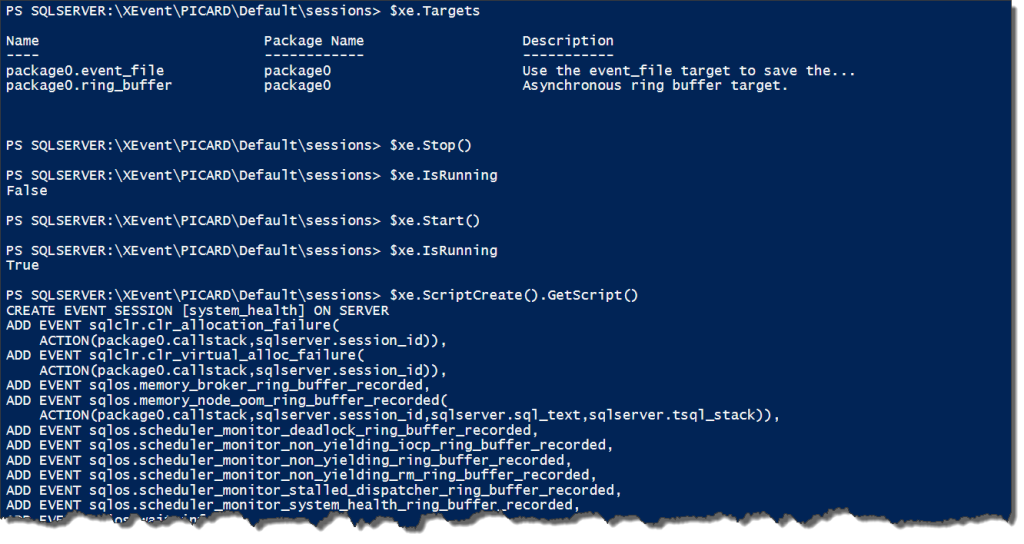

Step 1: At the beginning of the day, well before my presentation, I make sure my presentation machine has the latest updates for Windows and other corporate software installed. This is SUPER important if it’s a Tuesday (when Microsoft releases updates). Doing this avoids any update surprises while I’m presenting or right before I go on stage.

Step 2: A couple of hours or so before my presentation, I will walk through my presentation. I open up the PowerPoint slide deck and step through it. When I get to demos, I will walk through my demo scripts. I test EVERYTHING, and do it in order. If I encounter an error, I fix it and then start over. This helps me ensure that the flow works and that I understand what the step dependencies are in my demo.

Step 3: About an hour before my presentation, I will turn off everything on my presentation machine that the presentation doesn’t need. That means programs like Skype, Google, unneeded local SQL instances, virtual machines, and so on. I only want what I need running, both so that I have enough resources for my demos and so that possible distractions stay shut down.

Step 4: At least 15 minutes before I’m due to present, I go to my room and hook up my presentation machine. I test the video and make sure my adapter works. This way I can address any tech issues that could hamper the presentation. I will display PowerPoint and also my scripts and demos to make sure everything looks ok.

I also usually duplicate my screen to the projector rather than extend it. This is important because if I extend, the only way (typically) that I can see what the audience sees is to turn around and look at the projector screen, which is distracting for the audience. If I duplicate, I only have to look down at my own screen, which lets me maintain contact with the audience.

Step 5: Right before I present, I turn my phone OFF. Then I put it in my bag. I get it away from me. I don’t want to get calls, I don’t want to have to worry about silencing it, and I don’t want it buzzing in my pocket if I’ve got a lot of notifications. The phone is off and away.
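If it helps to have the timings above written down, they can be turned into a simple schedule. Here is a minimal Python sketch of that idea. The function name `build_schedule` and the exact offsets are my own assumptions for illustration: I’ve taken “a couple of hours” as 120 minutes and “right before” as 5 minutes, and left out Step 1 since it just happens at the start of the day.

```python
from datetime import datetime, timedelta

# Minutes before the talk for Steps 2 through 5. The offsets are assumptions:
# "a couple of hours" is taken as 120 and "right before" as 5.
STEPS = [
    (120, "Walk through slides and demos in order"),
    (60, "Shut down everything the presentation doesn't need"),
    (15, "Hook up the machine in the room and test video"),
    (5, "Turn the phone off and put it in the bag"),
]


def build_schedule(talk_start):
    """Return (time, task) pairs, earliest first, for a given talk start."""
    return [(talk_start - timedelta(minutes=m), task) for m, task in STEPS]


if __name__ == "__main__":
    start = datetime(2024, 1, 9, 14, 0)  # hypothetical 2:00 PM session
    for when, task in build_schedule(start):
        print(when.strftime("%H:%M"), task)
```

Adjust the offsets to whatever fits your own routine; the point is only that each step gets a time on the clock before you walk into the room.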

It’s GO time

At this point, I’m free and clear to do my presentation. Does that mean that nothing will go wrong? Of course not. However, performing these steps puts me in the best position to give my presentation without disruption. It is a foundation for success. Just like we build out our database solutions to minimize the chance of failure, we need to approach our presentations with a similar sort of care to help guarantee our own success.

I want to thank Andy Yun (@sqlbek) for hosting this month’s T-SQL Tuesday. It’s a great topic and I hope folks get a lot out of it. If you’re considering stepping into the speaking world (and you should!), this month’s blog posts should give you all the tools to succeed. Good luck!
