Twitter. It can be annoying, it can be frivolous, but you’d be surprised at how many times it gives me a blog topic. This time around, I was having a back and forth with some folks about Invoke-SqlCmd. I had recommended someone try using it, which was followed by a lot of people griping about the cmdlet while suggesting other community tools or scripts should be used.

While I understand where a lot of these folks come from, I wanted to share a little, in a longer form, about why I use and recommend Invoke-SqlCmd. I think it still gets a bad rap from the PowerShell power users for some of its…quirks. I want to stand up for this handy little cmdlet, which I think is likely the most useful part of the SqlServer PowerShell module.

The .NET Root

When we start talking about Invoke-SqlCmd, what are we trying to do? At the core, PowerShell scripters need something to execute SQL commands within the context of a .ps1 script. I often speak of how PowerShell is a framework where you can tie different parts of the Windows stack together, so this ability to run SQL is needed.

One of the strongest elements of PowerShell is that it is built on .NET, which means we can always use .NET code and objects. In the case of SQL, we can use traditional ADO.NET code to execute SQL against a SQL Server instance:

    $Datatable = New-Object System.Data.DataTable
    $Connection = New-Object System.Data.SQLClient.SQLConnection
    $Connection.ConnectionString = "server=localhost;database=AdventureWorks2012;trusted_connection=true;"
    $Connection.Open()
    $Command = New-Object System.Data.SQLClient.SQLCommand
    $Command.Connection = $Connection
    $Command.CommandText = 'select top 10 BusinessEntityID,PersonType,FirstName,LastName from person.Person'
    $DataAdapter = New-Object System.Data.SqlClient.SqlDataAdapter $Command
    $Dataset = New-Object System.Data.Dataset
    $DataAdapter.Fill($Dataset)
    $Connection.Close()
    $Dataset.Tables[0]

As you can see, this is pretty verbose. We have to create a connection object and set its connection string, then open the connection, execute the SQL, populate the data set, close the connection, then finally return the data set. No matter how you slice it, this is a lot of code for a simple SELECT statement.
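If you do stay with raw ADO.NET, one way to tame the repetition is to wrap those steps in a function. This is only a minimal sketch: the function name `Invoke-AdoQuery` and its parameters are made up for illustration, and it assumes Windows authentication against an instance you can reach.

```powershell
# Hypothetical helper (not part of any module): wraps the ADO.NET steps in one function.
function Invoke-AdoQuery {
    param(
        [Parameter(Mandatory)] [string] $ServerInstance,
        [Parameter(Mandatory)] [string] $Database,
        [Parameter(Mandatory)] [string] $Query
    )

    $connection = New-Object System.Data.SqlClient.SqlConnection
    $connection.ConnectionString = "server=$ServerInstance;database=$Database;trusted_connection=true;"
    $dataset = New-Object System.Data.DataSet

    try {
        $connection.Open()
        $command = New-Object System.Data.SqlClient.SqlCommand -ArgumentList $Query, $connection
        $adapter = New-Object System.Data.SqlClient.SqlDataAdapter -ArgumentList $command
        # Fill returns the number of rows; discard it so only the data reaches the pipeline.
        [void] $adapter.Fill($dataset)
    }
    finally {
        # Dispose closes the connection even when the query throws.
        $connection.Dispose()
    }

    # Return the first result table.
    $dataset.Tables[0]
}
```

With that in place, the call shrinks to something like `Invoke-AdoQuery -ServerInstance localhost -Database AdventureWorks2012 -Query 'select top 10 BusinessEntityID from person.Person'`, but you now own and maintain the helper yourself.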

SMOing it up

To avoid this verbosity, most programmers wouldn’t rely on the raw .NET unless they absolutely needed to. Instead, they would use existing libraries (or write their own if they had to). This is where the SQL Server Management Objects (SMO) come into play. I’ll skip the long boring explanation of what SMO is and just focus on the fact that we can use these existing libraries to simplify our call:

    $smosrv = New-Object Microsoft.SqlServer.Management.Smo.Server
    $query = 'select top 10 BusinessEntityID,PersonType,FirstName,LastName from person.Person'
    $results = $smosrv.Databases['AdventureWorks2012'].ExecuteWithResults($query)
    $results.Tables[0]

The SMO lets us reduce twelve lines of code (ignoring whitespace) to four for our SELECT output. This is the power of using existing libraries. We don’t have to reinvent the wheel for our work, which means we can focus more of our effort on everything around the SQL statement.

But what if we could make it even easier?

Keep It Simple, Sir (or Ma’am)

Four lines of code isn’t that much. The problem is that our code is a little less intuitive. Let’s keep in mind that most PowerShell scripters are not .NET programmers. Understanding dot notation isn’t necessarily difficult, but if it doesn’t come naturally to you there’s a learning curve that you have to climb.

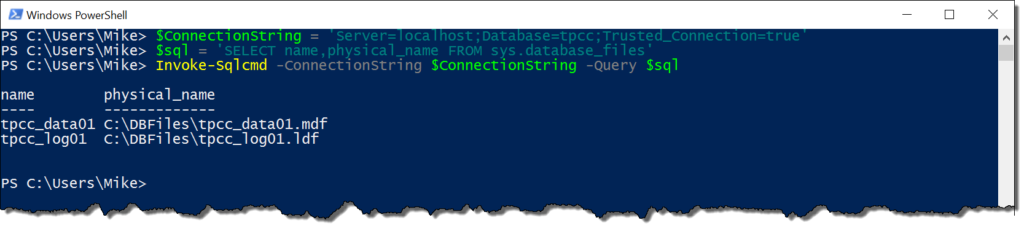

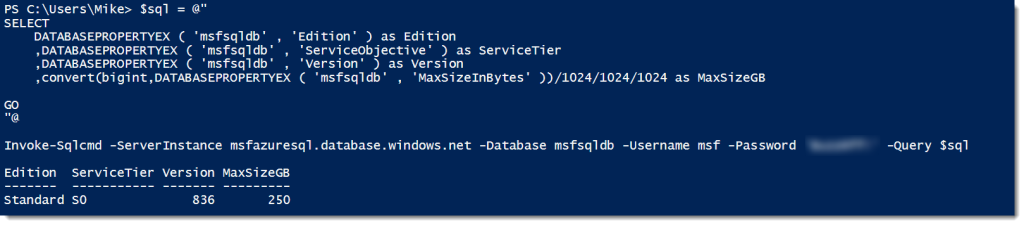

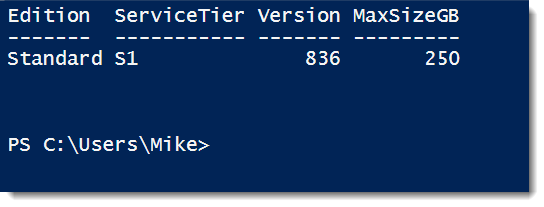

This is where Invoke-SqlCmd starts to make sense. First off, it wraps up all the functionality of the above .NET code so we can use it with the cmdlet call. Also, it follows the founding concepts of the PowerShell language, where the syntax is verbose enough to understand what you’re passing as arguments. Let’s look at our simple SELECT now using Invoke-SqlCmd:

    Invoke-Sqlcmd -ServerInstance localhost -Database 'AdventureWorks2012' `
        -Query 'select top 10 BusinessEntityID,PersonType,FirstName,LastName from person.Person'

If we break this down, you can see we have a clear set of syntax for calling the cmdlet. We specify an instance, a database, and a query. The output is an array of data rows by default, though newer versions of the cmdlet can return a data table instead through the -OutputAs parameter. Most scripters are not going to need much more than that. It’s a straightforward solution to a simple problem.
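Because the rows come back as objects, they drop straight into the rest of the pipeline. Here is a rough sketch of what that can look like, assuming the SqlServer module is installed and a local instance hosts AdventureWorks2012. The `'EM'` filter value is just an illustration.

```powershell
# Assumption: the SqlServer module is installed and localhost hosts AdventureWorks2012.
$params = @{
    ServerInstance = 'localhost'
    Database       = 'AdventureWorks2012'
    Query          = 'select top 10 BusinessEntityID,PersonType,FirstName,LastName from person.Person'
}

# Default output: one DataRow per result row, so the usual pipeline cmdlets apply.
Invoke-Sqlcmd @params |
    Where-Object { $_.PersonType -eq 'EM' } |
    Select-Object BusinessEntityID, FirstName, LastName

# In the SqlServer module, -OutputAs asks for a DataTable instead of individual rows.
$table = Invoke-Sqlcmd @params -OutputAs DataTables
```

Splatting the shared arguments with `@params` keeps both calls short and avoids the backtick line continuation.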

So why not?

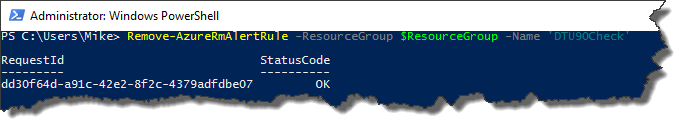

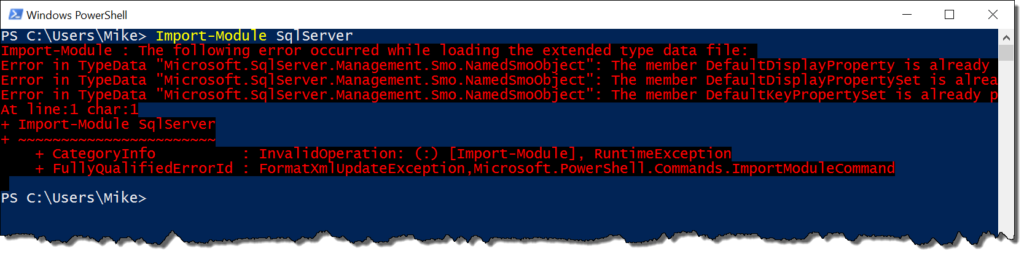

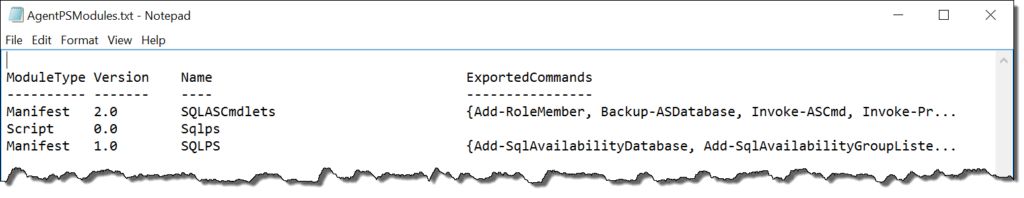

Why do people gripe so much about Invoke-SqlCmd then? Well, to understand this is to understand the history of SQLPS. For a long while, the SQL Server module for PowerShell was clunky and buggy. There were a lot of challenges with loading it and using it, such that many scripters decided to throw it out and write their own functions. In many cases, PowerShell folks would skip Invoke-SqlCmd not because it was bad, but because it came packaged with the rest of SQLPS and they wanted to avoid the entire module.

Now that the SQL Tools team has been reworking the module as SqlServer, this has become less of a concern. The module is less of a burden to load and the other components do not get in the way. There are also improvements and updates to the code to make it work better and serve more needs.

There are other reasons as well, but it usually boils down to the use case. Invoke-SqlCmd is great, but it can’t do everything. My anecdotal evidence is that the cmdlet will handle upwards of 90% of what I need it for, but there will still be edge cases. For these edge cases, we always have the more code-intensive options available to us. This is why PowerShell is so great: there’s always another way, whether it’s writing more detailed code or using a community function.

To touch on this last point, this is where I also bump up against folks. There are some fantastic community functions out there. Seriously, check out DBATools if you haven’t yet. These tools enhance and expand your PowerShell experience and I make heavy use of these tools. They also have better alternatives that address the quirks of the core SqlServer module.

The rub is that you can’t always rely on community tools being available to you. Sometimes a corporate environment won’t let you install those tools or scripts. It might be that you go into a gig and they haven’t even heard of these tools. I can tell you that as a consultant, I have to be very considerate of my clients’ environments before I start bringing in outside code. However, because Invoke-SqlCmd is part of Microsoft’s tool set, I can rely on it being there. There are fewer steps and hurdles to making use of it.
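If you want a script to confirm that before it runs anything, a quick check might look like the sketch below. The helper name `Test-InvokeSqlcmdAvailable` is hypothetical; the only real calls are `Get-Command` and `Write-Warning`.

```powershell
# Hypothetical helper: reports whether Invoke-Sqlcmd can be resolved on this machine.
function Test-InvokeSqlcmdAvailable {
    # Get-Command looks through loaded and auto-loadable modules and returns nothing if the cmdlet is absent.
    $command = Get-Command -Name Invoke-Sqlcmd -ErrorAction SilentlyContinue
    return [bool] $command
}

if (Test-InvokeSqlcmdAvailable) {
    # Show which module supplies the cmdlet (for example SqlServer or the older SQLPS).
    (Get-Command -Name Invoke-Sqlcmd).Source
}
else {
    Write-Warning 'Invoke-Sqlcmd was not found; install the SqlServer module or fall back to ADO.NET.'
}
```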

Making Sense Of It All

There are a lot of reasons for using this little cmdlet. There are also reasons not to use it, depending on your situation or use case. My goal here is to not let the baby get thrown out with the proverbial bathwater. Invoke-SqlCmd is one of my favorite cmdlets in my toolbox and (most of the time) it does exactly what I need it to. Whether I’m writing scripts or working ad hoc on a server, I usually don’t need a lot of fluff, just a way to execute a SQL query and sometimes get something back.

I hope you enjoyed my little love letter to Invoke-SqlCmd. I understand if it doesn’t suit your needs, but maybe you should give it another look? Or, if you’re a PowerShell newbie, you can see why using it can make your life a little easier. Wherever you go next after this blog post, I hope it is with a clearer view of how Invoke-SqlCmd can fit into your own PowerShell habits.
