Now that I’ve gotten some of my thought pieces out of my brain, I wanted to get back to some more technical posts, starting with some simpler techniques for people trying to figure out how to use SQL Server and PowerShell together. I know that a lot of database pros are starting to understand the importance of the language, but still struggle with some practical examples of how to get started. One of my goals with this blog is to bridge that gap.

When restoring a backup, it can be tedious to restore to a new location and have to figure out your MOVE statements. If you only have one data file and one log file, it’s probably not a big deal, but it’s still annoying. Usually, the steps for me are:

- Figure out my new data and log paths.

- Run a RESTORE FILELISTONLY against the backup file to get the files.

- Write out my RESTORE WITH MOVE commands using the new paths.

- Execute the restore.

None of this is difficult, but we can still make it easier. We have an established process, so putting some PowerShell scripting around it can automate our restore to make the script building faster and more consistent.

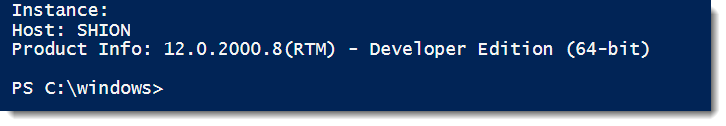

Our weapon of choice will be Restore-SqlDatabase. This workhorse cmdlet has been part of both the old SQLPS and the new SqlServer modules. The functionality hasn’t really changed, meaning that what we go over here should work for you regardless of which module you use. I always recommend using the most recent version of the code, but don’t worry if you can’t.

The cmdlet is straightforward in its use. Fundamentally, all we need to declare is an instance, database name, and backup file. However, if we don’t declare anything else, the cmdlet will try and restore the database files to their original locations. Keep in mind this is no different than how a normal RESTORE DATABASE command works.

This is where we make our lives easier with PowerShell. First off, to move files using Restore-SqlDatabase, we need to create a collection of RelocateFile objects. Don’t let the .Net-ness of this freak you out. All we’re doing is creating something that has the logical file name and the new physical file name. In other words, it’s just an abstraction of the MOVE statement in RESTORE DATABASE.
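To make that concrete, here is a minimal sketch of the pairing a RelocateFile object carries, shown next to the MOVE clause it stands in for. The logical and physical file names below are assumptions for illustration only; the real values come from the backup file itself.

```powershell
# Hypothetical names for illustration; real values come from RESTORE FILELISTONLY.
$logicalName     = 'AdventureWorks2014_Data'
$newPhysicalName = 'C:\DBFiles\Data\AdventureWorks2014_Data.mdf'

# The T-SQL MOVE clause that this pair corresponds to.
$moveClause = "MOVE N'$logicalName' TO N'$newPhysicalName'"
$moveClause

# With the SqlServer (or SQLPS) module loaded, the same pair as a RelocateFile object:
# $relocateFile = New-Object Microsoft.SqlServer.Management.Smo.RelocateFile ($logicalName, $newPhysicalName)
```

The object holds nothing more than those two strings, which is why building a collection of them is mostly a matter of working out the new paths.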

Let’s look at some code. I’ve got a script, but I think the best way to approach it is to break it up and talk about each section individually, just to make sure we’re all on the same page. To get started, we should declare a few things: the new file locations, output of a script file, database name for the restore, backup file, and then an array we can store our RelocateFile objects in.

#Set Variables

$NewDataPath = 'C:\DBFiles\Data'

$NewLogPath = 'C:\DBFiles\Log'

$OutputFile = '.\restore.sql'

$dbname = 'AdvWorks2014'

$BackupFile = 'C:\DBFiles\AdventureWorks2014.bak'

$relocate = @()

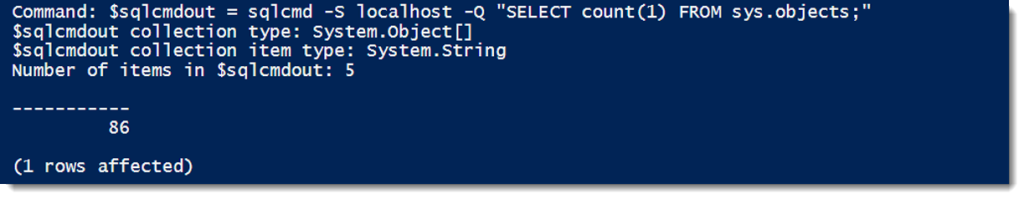

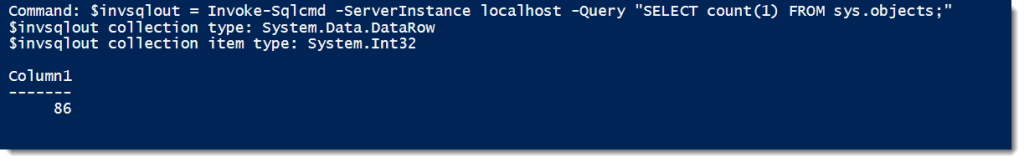

Next up is a simple RESTORE FILELISTONLY to get our file list. This needs to be done with Invoke-Sqlcmd because Restore-SqlDatabase has no support for the file list option.

#Get a list of database files in the backup

$dbfiles = Invoke-Sqlcmd -ServerInstance localhost -Database tempdb -Query "RESTORE FILELISTONLY FROM DISK='$BackupFile';"

Now comes the “magic”. Our RESTORE FILELISTONLY call gives us a collection of all our files, but with the old locations. We will loop through this collection, do some string replacement, and create our RelocateFile objects. I want to call out the use of Split-Path -Leaf. Split-Path is a handy cmdlet that separates out the different parts of a file path, and with -Leaf it gives you only the actual file name. We can append that value to the end of our new path (using Join-Path) and use the result to create the RelocateFile object for each file.

#Loop through filelist files, replace old paths with new paths

foreach($dbfile in $dbfiles){

$DbFileName = $dbfile.PhysicalName | Split-Path -Leaf

if($dbfile.Type -eq 'L'){

$newfile = Join-Path -Path $NewLogPath -ChildPath $DbFileName

} else {

$newfile = Join-Path -Path $NewDataPath -ChildPath $DbFileName

}

$relocate += New-Object Microsoft.SqlServer.Management.Smo.RelocateFile ($dbfile.LogicalName,$newfile)

}
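If you want to check the path logic before pointing it at a real backup, the same loop can be dry-run against mock rows. This is only a sketch: the two rows below are made-up stand-ins for RESTORE FILELISTONLY output, and `$plan` is a hypothetical name. It builds plain objects instead of RelocateFile objects so it runs without a SQL Server module or instance.

```powershell
# Target folders, as declared earlier in the script.
$NewDataPath = 'C:\DBFiles\Data'
$NewLogPath  = 'C:\DBFiles\Log'

# Mock rows standing in for RESTORE FILELISTONLY output (hypothetical values).
$dbfiles = @(
    [pscustomobject]@{ LogicalName = 'AdventureWorks2014_Data'; PhysicalName = 'C:\OldData\AdventureWorks2014_Data.mdf'; Type = 'D' }
    [pscustomobject]@{ LogicalName = 'AdventureWorks2014_Log';  PhysicalName = 'C:\OldLogs\AdventureWorks2014_Log.ldf';  Type = 'L' }
)

# Same decision as the loop above: log files go to the log path, everything else to the data path.
$plan = foreach ($dbfile in $dbfiles) {
    $DbFileName = $dbfile.PhysicalName | Split-Path -Leaf
    $target = if ($dbfile.Type -eq 'L') { $NewLogPath } else { $NewDataPath }
    [pscustomobject]@{
        LogicalName     = $dbfile.LogicalName
        NewPhysicalName = Join-Path -Path $target -ChildPath $DbFileName
    }
}

# Review the planned moves before building RelocateFile objects from them.
$plan | Format-Table -AutoSize
```

Once the planned paths look right, the real loop produces the same pairs as RelocateFile objects.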

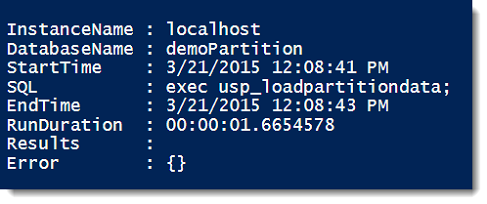

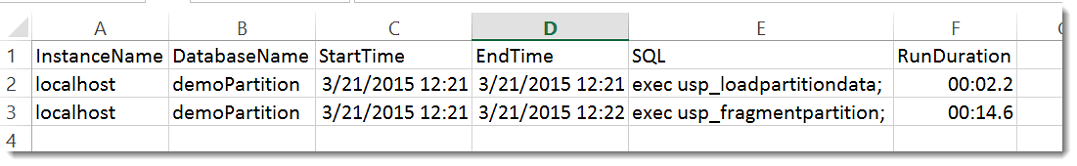

Creating the RelocateFile objects is the heavy lifting. After this, it’s just a matter of calling Restore-SqlDatabase with the right arguments. Note that I’m using the -Script argument and piping this to Out-File. We’re using PowerShell to create a SQL script, which is a pattern I like. As handy as these tools are, they don’t always get everything, so I will use scripts to create scripts and then edit the final output with whatever else I need.

#Create Restore script

Restore-SqlDatabase -ServerInstance localhost `
-Database $dbname `
-RelocateFile $relocate `
-BackupFile "$BackupFile" `
-RestoreAction Database `
-Script | Out-File $OutputFile

By saving and reusing this script, I have saved myself a lot of man hours for restores. The strength here isn’t in any mystery code or magic functionality. It is simply a matter of leveraging a framework to automate an existing process.

I’ve actually taken this script and created a more formalized function with it. The core is there, but in keeping with the tooling spirit, I’ve added some additional code that validates file system paths. You can find it on my GitHub repository and you’re welcome to download and make use of it yourself.
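For a rough idea of what path validation could look like, here is a minimal sketch. It is not the function from the repository: `Test-RestorePath` is a hypothetical name, and the check assumes the script runs on the SQL Server host itself, since Test-Path looks at the file system of the machine running PowerShell while the restore paths are resolved on the server.

```powershell
# Hypothetical helper: throws if any target folder is missing or is not a directory.
function Test-RestorePath {
    param(
        [Parameter(Mandatory)]
        [string[]]$Path
    )

    foreach ($p in $Path) {
        if (-not (Test-Path -Path $p -PathType Container)) {
            throw "Restore path '$p' does not exist or is not a directory."
        }
    }

    # Every path passed the check.
    return $true
}

# Example call using the temp folder, which should exist on any machine.
$tempFolder = [System.IO.Path]::GetTempPath()
Test-RestorePath -Path $tempFolder
```

In the restore script, the call would be something like `Test-RestorePath -Path $NewDataPath, $NewLogPath` before the loop, so a typo in a folder name fails fast instead of surfacing as a restore error.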
