Welcome to another month of T-SQL Tuesday, started by Adam Machanic(@adammachanic) and hosted this month by Andy Yun(@SqlBek). The topic for this month’s blog party is “Just say ‘NO’ to defaults!”, a call on what we have learned and how we manage SQL Server defaults in our environments. While you will probably find lots of posts out there on what you should or should not set your SQL Server configurations to, I wanted to share with you a post on some tools that can help you manage these configurations.

Management Overhead

Many database professionals, SQL Server or otherwise, learn very quickly that you don’t want to stick with your default settings. The database vendors usually try and set some general values that can apply to most situations, but these typically don’t last long in any enterprise. You probably have a set of configurations you change from the defaults whenever you install a new SQL Server instance.

The struggle, whether you have 5 SQL Servers or 500, is keeping these settings consistent. Sure, you can script out your changes (and should), but how do you manage the changes over time? What if someone changes a setting, how do you enforce your configurations? Or maybe someone else sets up an instance and doesn’t apply your scripts? It becomes an ugly problem to manage.

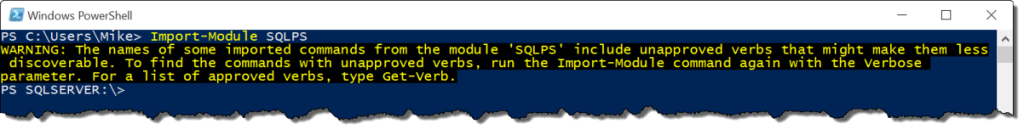

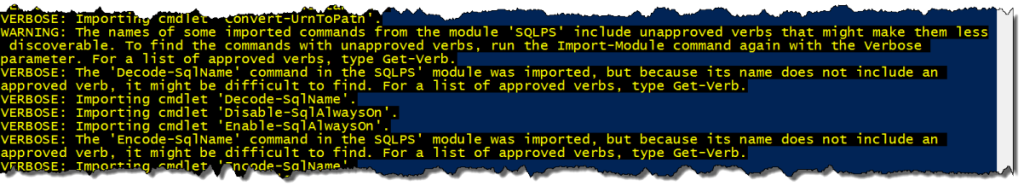

Since I’m a Powershell fan, I’m also a fan of Desired State Configuration. While the technology is still new on the scene, it’s quickly turning into an effective way to manage your server builds. When thinking about Andy’s topic and the problem of managing changes to your instance defaults, my ‘eureka’ moment was that the DSC model could easily be applied to managing these configurations. The result was three Powershell functions that provide tools you can use to control the settings in your environment.

Test-SQLConfiguration

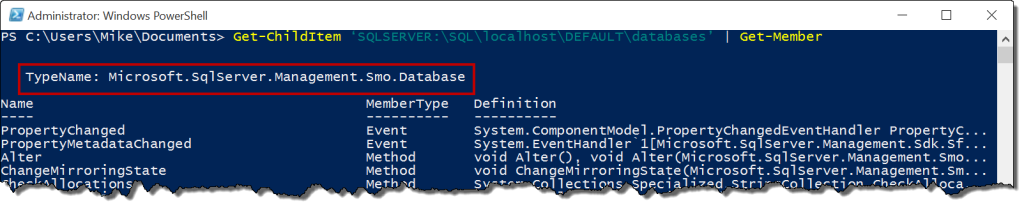

The first function I wrote was to evaluate the configurations on an instance. To do this, I started with the assumption that I’d have a hash table of Config(name) and DesiredValue(value) pairs. Each pair would be the SMO Configuration Class property and the desired value I wanted to check. Then I would simply loop through each one and, if it did not match, I would add that to an output array. The function would then return a collection of configurations that did not match my desired state.

function Test-SQLConfiguration{

param([string]$InstanceName='localhost'

,[Parameter(Mandatory=$true)][PSObject] $Configs

)

$smosrv = new-object ('Microsoft.SqlServer.Management.Smo.Server') $InstanceName

$output = @()

foreach($config in $configs){

if($config.DesiredValue -ne $smosrv.Configuration.$($config.Name).RunValue){

$output += New-Object PSObject -Property (@{'Configuration'=$config.Name;

'DesiredValue'=$config.DesiredValue;

'CurrentValue'=$smosrv.Configuration.$($config.Name).RunValue})

}

}

return $output

}

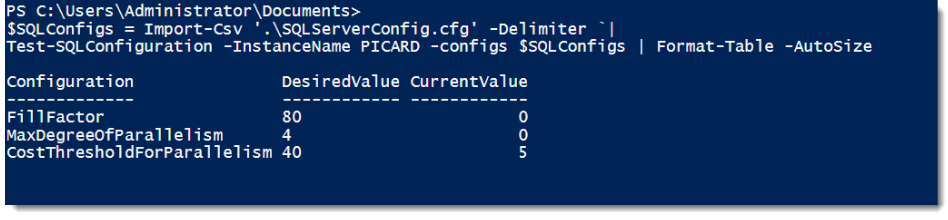

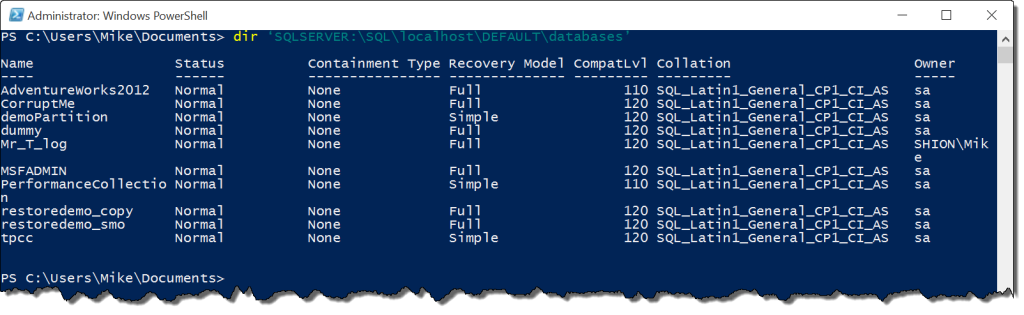

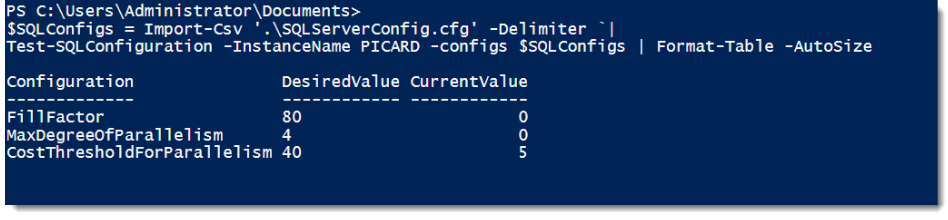

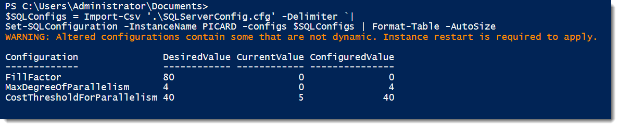

To test this, I put together a very simple pipe-delimited file of configurations I wanted to check. These configuration names had to match the SMO property names (which aren’t difficult to acquire) and the resulting file and output looks like this:

SQLServerConfig.cfg

Name|DesiredValue

FillFactor|80

MaxDegreeOfParallelism|4

CostThresholdForParallelism|40

XPCmdShellEnabled|0

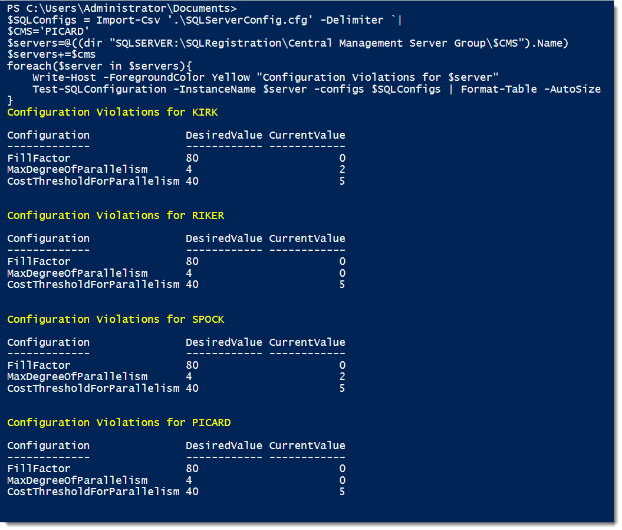

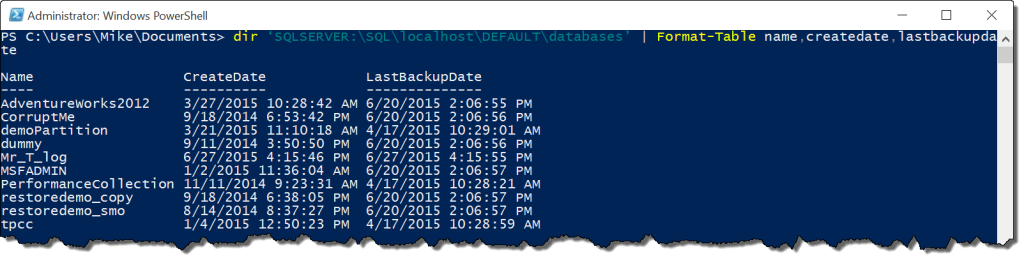

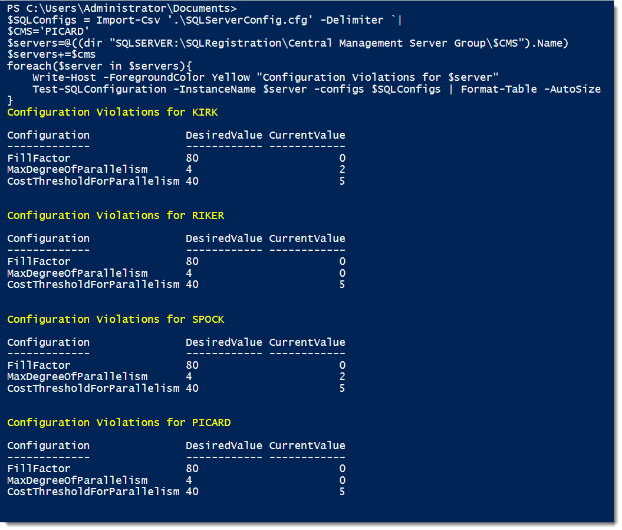

This function provides a quick view of which SQL Server configurations don’t match my desired values. What’s cool is now I can then take this function and easily run it across my entire enterprise.

With this function, I’ve removed the burden of validating my SQL Server instance configurations. Since the output is an object, there’s many flexible options for reporting and collecting the information. Writing this to a text file is a snap or uploading it to a database table for ongoing auditing.

Set-SQLConfiguration

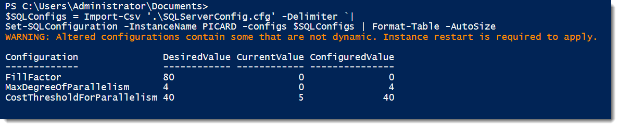

The next step, after we’ve checked configurations, is to correct the violations. This next function works much like Test-SQLConfiguration and takes the same two parameters as Test-SQLConfiguration. The difference is that the function will now alter the value and then reconfigure the instance to apply the change.

function Set-SQLConfiguration{

param([string]$InstanceName='localhost'

,[Parameter(Mandatory=$true)][PSObject] $Configs

)

$smosrv = new-object ('Microsoft.SqlServer.Management.Smo.Server') $InstanceName

$output = @()

foreach($config in $configs){

if($config.DesiredValue -ne $smosrv.Configuration.$($config.Name).RunValue){

$row = New-Object PSObject -Property (@{'Configuration'=$config.Name;

'DesiredValue'=$config.DesiredValue;

'CurrentValue'=$smosrv.Configuration.$($config.Name).RunValue})

$smosrv.Configuration.$($config.Name).ConfigValue = $Config.DesiredValue

$smosrv.Configuration.Alter()

$row | Add-Member -MemberType NoteProperty -Name 'ConfiguredValue' -Value $smosrv.Configuration.$($config.Name).RunValue

$output += $row

if($smosrv.Configuration.$($config.Name).IsDynamic -eq $false){$reboot=$true}

}

}

if($reboot){Write-Warning 'Altered configurations contain some that are not dynamic. Instance restart is required to apply.'}

return $output

}

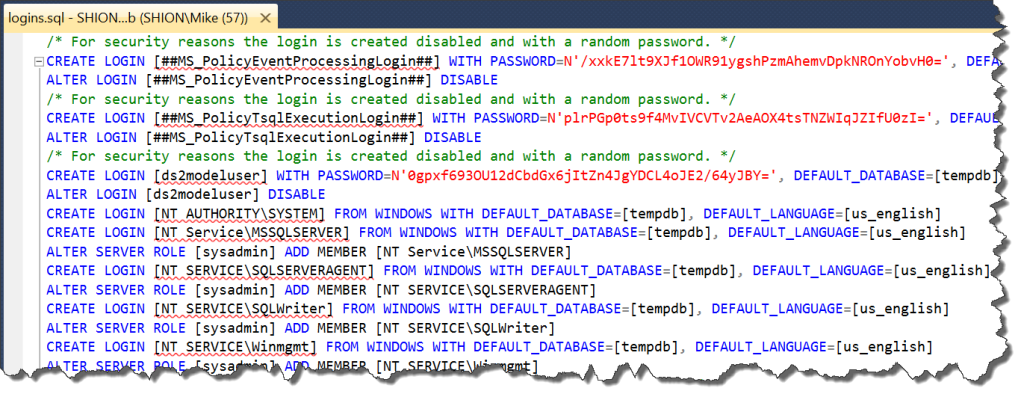

Because the function is built to accept a hash table of configurations, we can use the same pipe-delimited file (or any delimited file) to update the instance. Note the warning with the output. Because not all configurations are dynamic, the function will alert you if a non-dynamic configuration was changed. If you change a non-dynamic configuration, you will need to restart the SQL Service to complete the change.

Wrap Up

The last challenge is building out the configurations to check against. The third function I wrote will handle that, but I felt like covering that functionality would make this blog post to long. Next week I will cover the Get-SQLConfiguration function, additional techniques for extending these functions, and then tell you where you can get the code. Please note that this code is in a work-in-progress state, so use with caution. However, this also means that if you have any suggestions, I’d love to hear them so I can turn this into a functional tool that the community can use.

Thanks again to Andy for a great T-SQL Tuesday topic. Keep your eye on his invite blog post and the #TSQL2SDAY hashtag on Twitter for other great contributions.

I’m tweeting!

I’m tweeting!