The other day I was working with Doug Lane (b|t) over Twitter on an object visibility issue. He was trying to hide objects, particularly the system views in his database, from the list shown when linking to a SQL Server over ODBC. I was able to help him out, but it got me wondering about exactly what allows you to see objects. I mean, I always knew I could see something if I had SELECT on it, but was that what really controlled the visibility?

I see you!

Seeing a table boils down to being able to view its metadata in the database, which means we must be given the VIEW DEFINITION privilege. This permission was new in SQL Server 2005, as previous versions of SQL Server would allow any user in the public role to view object metadata. You can GRANT, REVOKE, or DENY this privilege at any of the four levels within SQL Server: server, database, schema, and object. Many actions implicitly grant VIEW DEFINITION, such as granting a user SELECT privileges on a table or EXECUTE on a stored procedure.
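As a rough sketch of those four levels, the statements below grant the permission at each scope. The names example_login, example_user, and example_table are placeholders I made up for illustration, not objects on my test instance. One detail worth knowing: at the server level the permission is named VIEW ANY DEFINITION, and server-level permissions have to be granted while you are in master.

```sql
-- Server level: the permission is VIEW ANY DEFINITION and goes to a login.
USE master;
GRANT VIEW ANY DEFINITION TO example_login;

-- Database level: covers every object in the current database.
USE msf_test;
GRANT VIEW DEFINITION TO example_user;

-- Schema level: covers every object in the dbo schema.
GRANT VIEW DEFINITION ON SCHEMA::dbo TO example_user;

-- Object level: covers a single (hypothetical) table.
GRANT VIEW DEFINITION ON OBJECT::dbo.example_table TO example_user;
```

DENY takes the same shape as GRANT here, and REVOKE is usually written with FROM in place of TO.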

Let’s look at a couple of examples. I’ll go ahead and create a demo_login login on my test instance and add him to my database as a user, but we won’t give him any permissions yet:

use msf_test;
create login demo_login with password='dummy';
create user demo_login for login demo_login;

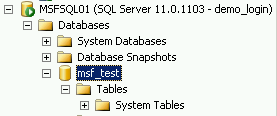

Now, in my test database, I have a table called demo1. My login, demo_login, hasn’t been granted any privileges on this table, so I can’t see the table in my object browser.

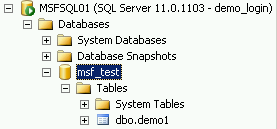

As soon as I grant a permission on it, like SELECT, the table appears:

grant select on demo1 to demo_login;

Again, this is because any GRANT on our table implicitly grants VIEW DEFINITION.
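One way to confirm this without clicking through the object browser is to impersonate the user and ask the catalog views directly, since they only return rows for objects the caller is allowed to see. This is a minimal sketch, assuming you are connected as someone who is allowed to impersonate demo_login:

```sql
USE msf_test;

-- Run the check as demo_login rather than as myself.
EXECUTE AS USER = 'demo_login';

-- Catalog views are filtered by metadata visibility, so demo1 should only
-- show up here once demo_login holds some permission on it.
SELECT name, create_date
FROM sys.tables
WHERE name = 'demo1';

-- Switch back to my own security context.
REVERT;
```

Running it before and after the GRANT should mirror what the object browser shows.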

Now, just like any other privilege, we can explicitly DENY that privilege to a user and override (almost) any implicit grant. So, if we want to prevent a user from viewing the metadata on an object, but still allow him to select from it, we simply have to deny him VIEW DEFINITION:

deny view definition on demo1 to demo_login;

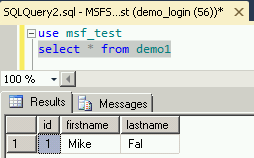

And even though I can’t see the object, I can still query it.
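To see both halves of that behavior from a query window, something like the following should do it. Based on what I saw above, I would expect the first query to come back empty and the second to return rows, but treat that as my expectation rather than a guarantee. The commented-out REVOKE at the end is just one way to back the DENY out again.

```sql
USE msf_test;
EXECUTE AS USER = 'demo_login';

-- Metadata lookup: with VIEW DEFINITION denied, I would expect no row for demo1.
SELECT name FROM sys.tables WHERE name = 'demo1';

-- Data access: the earlier GRANT SELECT is still in place, so this should work.
SELECT TOP (5) * FROM demo1;

REVERT;

-- To undo the DENY later, REVOKE removes it without granting anything new.
-- REVOKE VIEW DEFINITION ON demo1 FROM demo_login;
```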

You can’t stop me! I’m invincible!

The caveat to explicitly denying permissions to a user is that three access levels cannot be denied view permissions. These levels are:

- Server sysadmin role

- The owner of the object in question

- The database db_owner role (implied ownership of all database objects)

If any of these levels apply, then the user will always be able to see the object. To boot, the system views are a little tricky. I was unable to explicitly deny VIEW DEFINITION to either the INFORMATION_SCHEMA or the sys schemas and prevent them from being visible. This one will take a little more research, but in the interim I know that you can prevent them from being seen in a linked ODBC manager by explicitly denying select on individual system views.
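Here is a hedged sketch for both halves of that paragraph. The first part checks whether demo_login falls under any of the three exemptions, and the second shows the workaround of denying SELECT on individual system views. I picked sys.tables and sys.views purely as examples; which views you actually need to hide will depend on what your ODBC client lists.

```sql
USE msf_test;

-- Role checks: 1 = member, 0 = not a member, NULL = could not be determined.
SELECT IS_SRVROLEMEMBER('sysadmin', 'demo_login') AS is_sysadmin,
       IS_ROLEMEMBER('db_owner', 'demo_login')    AS is_db_owner;

-- Ownership check: principal_id is NULL when the object is simply owned
-- by the owner of its schema, so fall back to the schema's owner.
SELECT o.name,
       USER_NAME(COALESCE(o.principal_id, s.principal_id)) AS owner_name
FROM sys.objects AS o
JOIN sys.schemas AS s ON s.schema_id = o.schema_id
WHERE o.name = 'demo1';

-- Workaround for system views: deny SELECT on each one individually.
DENY SELECT ON sys.tables TO demo_login;
DENY SELECT ON sys.views TO demo_login;
```

Keep in mind that denying SELECT on catalog views can also break tools that rely on them for that user, so I would test this against the client in question before rolling it out.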

To wrap up, VIEW DEFINITION is the pivot point of visibility. If you have it, even by accident, you can see that object through any of the methods that list database objects (SSMS object browser, ODBC link manager, etc.). There are some tricks to it, but it can be a useful tool if you have specific visibility and permission requirements.

