A couple years ago I wrote a blog post on shredding query plans with XML. At the time, it was just an experiment. I investigated how to parse XML and a query plan without any real goal or purpose other than “what can I do with this?” Then I left it alone and didn’t come back to it.

At least not until recently. Fast forward a few years, and I was trying to tune a large SQL batch process that had a loop. It wasn’t a very pretty process and it worked, mostly, but it could definitely benefit from some performance love. The trick was quantifying the changes I made within it. A common way to do this is to check logical reads. The lower your logical reads, the less “work” your query is typically doing. The hard part was capturing all the executions within the loop and summing the logical reads across a single batch execution.

At first I went to Plan Explorer. This is a great tool and has a lot of information, but what it was missing was the ability to sum up all my logical reads across the entire batch execution. I could look at each individual query, but adding these values up by hand was going to be tedious and painful. Two things I hate.

At this point, I figured why not give PowerShell a shot? After all, I knew that the query plan was an XML doc and I could easily traverse that using the XML functionality built into the language. That combined with a little XPath (which I’m terrible at, by the way) should solve my problem.

Armed with this knowledge, I charged ahead. Everything worked more or less as expected, but the one piece I missed from my previous blog post was the XML namespace. See, every element in a query plan belongs to the showplan namespace, so the XML pieces in PowerShell need that namespace to know what to query. I floundered with this for a bit until I found Jonathan Kehayias’s (@SqlPoolBoy) post on sanitizing query plans.

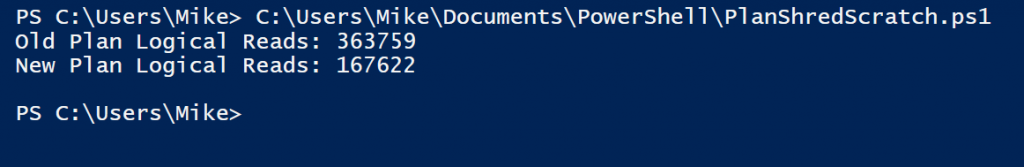
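To see why the namespace matters, here is a minimal sketch that runs the same query with and without it. The inline XML is a hypothetical, heavily trimmed stand-in for a real .sqlplan file, and the `sm` prefix is just a label I picked; only the namespace URI has to match the plan.

```powershell
# Hypothetical, heavily trimmed stand-in for a real .sqlplan file.
[xml]$plan = @'
<ShowPlanXML xmlns="http://schemas.microsoft.com/sqlserver/2004/07/showplan">
  <RunTimeCountersPerThread Thread="0" ActualRows="10" ActualLogicalReads="5" />
  <RunTimeCountersPerThread Thread="0" ActualRows="20" ActualLogicalReads="7" />
</ShowPlanXML>
'@

# Without a prefix, XPath only looks at elements in no namespace,
# so nothing in the plan matches.
$withoutNs = $plan.SelectNodes('//RunTimeCountersPerThread')

# Map a prefix of our choosing ("sm") to the showplan namespace URI.
$nsMgr = New-Object System.Xml.XmlNamespaceManager $plan.NameTable
$nsMgr.AddNamespace('sm', 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')

# With the prefix and the namespace manager, the same query finds the nodes.
$withNs = $plan.SelectNodes('//sm:RunTimeCountersPerThread', $nsMgr)

"Matches without namespace: $($withoutNs.Count)"
"Matches with namespace:    $($withNs.Count)"
```

Against this sample, the first query should come back empty and the second should find both nodes. That empty result with no error is exactly the kind of thing that had me floundering.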

Once you have the namespace set, the rest becomes easy. To go with my previous example, the following statements allowed me to sum and compare logical reads across all statements executed in the batch:

    # Read the query plan files into XML objects (the [xml] cast does the parsing)
    [xml]$old = Get-Content C:\Users\Mike\Documents\oldQueryPlan.sqlplan
    [xml]$new = Get-Content C:\Users\Mike\Documents\newExecutionPlan.sqlplan

    # Set up the namespace manager with the showplan namespace
    $nsMgr = New-Object 'System.Xml.XmlNamespaceManager' $old.NameTable
    $nsMgr.AddNamespace('sm', 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')

    # Query all RunTimeCountersPerThread nodes and then sum ActualLogicalReads
    'Old Plan Logical Reads: ' + ($old.SelectNodes('//sm:RunTimeCountersPerThread', $nsMgr) | Measure-Object -Property ActualLogicalReads -Sum).Sum
    'New Plan Logical Reads: ' + ($new.SelectNodes('//sm:RunTimeCountersPerThread', $nsMgr) | Measure-Object -Property ActualLogicalReads -Sum).Sum

Once the pattern is down, the use is pretty straightforward. There are also more options available to you. If we just look at the RunTimeCountersPerThread node, we can compare other values such as rows, scans, and CPU time. We could really get crazy and extract all the different statements within the batch. There are numerous possibilities for analysis and review.
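As a rough sketch of those two ideas, the snippet below sums a few other counters and then breaks logical reads down per statement. The inline plan is a hypothetical, heavily trimmed sample, and I’m assuming the counters show up as the `ActualRows`, `ActualScans`, `ActualCPUms`, and `ActualLogicalReads` attributes; plans from older SQL Server versions may not carry all of them, so check your own plan XML first.

```powershell
# Hypothetical, heavily trimmed plan containing two statements.
[xml]$plan = @'
<ShowPlanXML xmlns="http://schemas.microsoft.com/sqlserver/2004/07/showplan">
  <BatchSequence><Batch><Statements>
    <StmtSimple StatementId="1" StatementText="SELECT * FROM dbo.Orders">
      <RunTimeCountersPerThread Thread="0" ActualRows="100" ActualScans="1" ActualCPUms="4" ActualLogicalReads="30" />
      <RunTimeCountersPerThread Thread="0" ActualRows="100" ActualScans="0" ActualCPUms="1" ActualLogicalReads="12" />
    </StmtSimple>
    <StmtSimple StatementId="2" StatementText="SELECT * FROM dbo.Customers">
      <RunTimeCountersPerThread Thread="0" ActualRows="5" ActualScans="1" ActualCPUms="0" ActualLogicalReads="8" />
    </StmtSimple>
  </Statements></Batch></BatchSequence>
</ShowPlanXML>
'@

$nsMgr = New-Object System.Xml.XmlNamespaceManager $plan.NameTable
$nsMgr.AddNamespace('sm', 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')

# Sum several counters across the whole batch.
$counters = $plan.SelectNodes('//sm:RunTimeCountersPerThread', $nsMgr)
$totals = [ordered]@{}
foreach ($name in 'ActualRows', 'ActualScans', 'ActualCPUms', 'ActualLogicalReads') {
    $totals[$name] = ($counters | Measure-Object -Property $name -Sum).Sum
}
$totals

# Break logical reads down per statement, using a relative query (.//)
# so only the counters underneath each statement are included.
$perStatement = foreach ($stmt in $plan.SelectNodes('//sm:StmtSimple', $nsMgr)) {
    $reads = ($stmt.SelectNodes('.//sm:RunTimeCountersPerThread', $nsMgr) |
        Measure-Object -Property ActualLogicalReads -Sum).Sum
    [pscustomobject]@{
        StatementId  = $stmt.StatementId
        LogicalReads = $reads
        Statement    = $stmt.StatementText
    }
}

# Heaviest statements first.
$perStatement | Sort-Object LogicalReads -Descending
```

Sorting by reads puts the worst offenders at the top, which is usually where I’d want to start tuning anyway.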

I’m not here to tell you that you should start using PowerShell to automate query tuning. Query performance is an art form and requires a lot of case-by-case analysis. However, like any great carpenter, it’s good to know the capabilities of your tool set. Understanding the options available to you not only helps you be more effective, but can also provide answers you may not have had access to.
